In computing, a cache system is a a high speed data storage that's mainly used to store a small subset of data optimizing for extremely fast retrieval times.

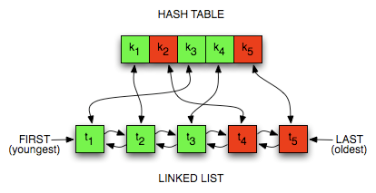

LRU cache is a caching system in which the least recently used items are evicted or removed from the cache storage when all the available pages/buckets are already filled and there is a need to insert a new item.

In this short article I will be going through how to design and implement a simple LRU cache data structure in Kotlin.

There are many approaches when implementing an LRU cache, in this tutorial, we will be using the LinkedHashMap which is a key value Map implementation on the JVM.

The main criteria of selection here is mostly around the notion of ordering and the ordering property is really important in this particular type of data structure we want to implement.

// Initial capacity : 0

// Load factor : 0.75f

// Ordering : Access ordering (true)

val map = LinkedHashMap<String, Any>(0, 0.75f, true)

Note that among the constructor properties listed above, what we care the most about is ordering, which in our case should be the most recently used items are placed in the front-end of the queue.

I Implementation

The implementation of the data structure will be subdivided in 3 main parts :

- APIs declarations

- Implementations for the APIs

- And finally we should be able to test the final structure.

I. 1. API declarations

The top level structure of our LRU cache will be a generic collection class. This provides more flexibility and static type checking safety at compile time.

// Note that the structure definition is a generic collection type

class LRUCache<T>(val maxSize: Int) {}

The overall API of the structure will cover the most important operations that can be done on a typical cache system, which are basically : put, get, delete and reset

class LRUCache<T>(val maxSize: Int) {

// Save a key/value pair in the cache

fun put(key: String, value: T)

// Remove an item corresponding to the key provided

fun delete(key: String)

// Retrieve data corresponding to the provided key

fun get(key: String): T? = internalCache.get(key)

// Remove everything in the cache

fun reset()

}I. 2. API implementation

Now that we have defined the APIs for our structure, it's time to go on with the implementation code.

- The first thing we'd want to implement is the internal cache that works as the backend behind the exposed APIs.

private val internalCache: MutableMap<String, T> = object : LinkedHashMap<String, T>(0, 0.75f, true) {

override fun removeEldestEntry(eldest: MutableMap.MutableEntry<String, T>?): Boolean {

return size > maxSize

}

}The internal cache structure is a LinkedHashMap as mentioned above and it has one very important property : Entries in the rear end of the queue are removed when all the available buckets have been occupied.

- Next step is implementing our API core functions

fun put(key: String, value: T) {

internalCache.put(key, value)

}

fun delete(key: String): Boolean {

return internalCache.remove(key) != null

}

fun reset() {

internalCache.clear()

}

fun get(key: String): T? {

return internalCache.get(key)

}

- After having implemented our core functions, it's time to add some useful helper functions in the mix.

// Synchronized access here is used to prevent a thread modifying the underlying structure while this method is being executed

fun size(): Long {

return synchronized(this) {

val snapshot = LinkedHashMap(internalCache)

snapshot.size.toLong()

}

}

// log the full internal cache state

fun dump() {

println(internalCache)

}

Now that our LRU cache implementation is complete, next step we will start testing our implementation.

In the mean time here's the complete implementation below

// Complete implementation of the LRUCache

class LRUCache<T>(val maxSize: Int) {

private val internalCache: MutableMap<String, T> = object : LinkedHashMap<String, T>(0, 0.75f, true) {

override fun removeEldestEntry(eldest: MutableMap.MutableEntry<String, T>?): Boolean {

return size > maxSize

}

}

fun put(key: String, value: T) {

internalCache.put(key, value)

}

fun delete(key: String): Boolean {

return internalCache.remove(key) != null

}

fun reset() {

internalCache.clear()

}

fun get(key: String): T? {

return internalCache.get(key)

}

fun size(): Long {

return synchronized(this) {

val snapshot = LinkedHashMap(internalCache)

snapshot.size.toLong()

}

}

fun dump() {

println(internalCache)

}

}

II. Testing

val cache = LRUCache<String>(2)

cache.put("1", "One")

cache.put("2", "Two")

cache.get("1")

cache.put("3", "Three")

assert(cache.get("1") == "One")

assert(cache.get("3") == "Three")

assert(cache.get("3") != "One")

// Expect this to be null since the backet with "2" was freed on max size (2)

// Since it was the least recently used

assert(cache.get("2") == null)

cache.dump() // {1=One, 3=Three}III. Conclusion

In this short tutorial we were able to implement a fairly simple LRU cache by navigating necessary notions about building this particular type of caching system.

The next steps here might be the introduction of ttl ( time to live) parameters and make it more robust for edge cases.

I hope y'all enjoyed this piece, if you have any question, don't hesitate to contact me.

Ciao !